The RS/6000 virtual address space is partitioned into segments (see Appendix C, Cache and Addressing Considerations, for an extensive discussion of the virtual-addressing structure). A segment is a 256MB, contiguous portion of the virtual-memory address space into which a data object can be mapped. Process addressability to data is managed at the segment (or object) level so that a segment can be shared between processes or maintained as private. For example, processes can share code segments yet have separate and private data segments.

The following sections describe various aspects of the Virtual Memory Manager (VMM):

Virtual-memory segments are partitioned into fixed-size units called pages. In AIX, the page size is 4096 bytes. Each page in a segment can be in real memory (RAM), or stored on disk until it is needed. Similarly, real memory is divided into 4096-byte page frames. The role of the VMM is to manage the allocation of real-memory page frames and to resolve references by the program to virtual-memory pages that are not currently in real memory or do not yet exist (for example, when a process makes the first reference to a page of its data segment).
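With 256MB segments and 4096-byte pages, each segment holds 65,536 pages. The sketch below shows how an address decomposes into segment, page, and byte offset. It assumes a 32-bit effective address whose high-order 4 bits select the segment; that bit layout is an assumption made for illustration, and Appendix C remains the reference for the actual addressing structure.

```python
SEGMENT_SIZE = 256 * 1024 * 1024  # 256MB per segment (from the text)
PAGE_SIZE = 4096                  # AIX page size (from the text)
PAGES_PER_SEGMENT = SEGMENT_SIZE // PAGE_SIZE  # 65,536 pages per segment


def split_effective_address(ea: int) -> tuple[int, int, int]:
    """Split an address into (segment index, page within segment, byte offset).

    Assumption: a 32-bit effective address whose high-order 4 bits select
    one of 16 segments; the low 28 bits address bytes within the segment.
    """
    if not 0 <= ea < 2**32:
        raise ValueError("expected a 32-bit effective address")
    segment, within_segment = divmod(ea, SEGMENT_SIZE)  # high 4 bits / low 28 bits
    page, offset = divmod(within_segment, PAGE_SIZE)     # 16-bit page index / 12-bit offset
    return segment, page, offset


print(split_effective_address(0x20005123))  # (2, 5, 291)
```

A reference to a page whose frame is not in real memory (or that has never been touched) is what triggers the VMM's page-fault handling described above.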

Since the amount of virtual memory that is in use at any given instant may be larger than real memory, the VMM must store the surplus on disk. From the performance standpoint, the VMM has two, somewhat opposed, objectives:

In pursuit of these objectives, the VMM maintains a free list of page frames that are available to satisfy a page fault. The VMM uses a page-replacement algorithm to determine which virtual-memory pages currently in memory will have their page frames reassigned to the free list. The page-replacement algorithm uses several mechanisms:

The following sections describe the free list and the page-replacement mechanisms in more detail.

The VMM maintains a list of free page frames that it uses to accommodate page faults. In most environments, the VMM must occasionally add to the free list by reassigning some page frames owned by running processes. The virtual-memory pages whose page frames are to be reassigned are selected by the VMM's page-replacement algorithm. The number of frames reassigned is determined by the VMM thresholds.

In AIX Version 3, the contents of page frames are not lost when the page frames are reassigned to the free list. If a virtual-memory page is referenced before the frame it occupies is actually used to satisfy a page fault, the frame is removed from the free list and reassigned to the faulting process. This phenomenon is termed a reclaim. Reclaiming is not supported in AIX Version 4.

The pages of a persistent segment have permanent storage locations on disk. Files containing data or executable programs are mapped to persistent segments. Since each page of a persistent segment has a permanent disk storage location, the VMM writes the page back to that location when the page has been changed and can no longer be kept in real memory. If the page has not changed, its frame is simply reassigned to the free list. If the page is referenced again later, a new copy is read in from its permanent disk-storage location.

Working segments are transitory, exist only during their use by a process, and have no permanent disk-storage location. Process stack and data regions are mapped to working segments, as are the kernel text segment, the kernel-extension text segments and the shared-library text and data segments. Pages of working segments must also have disk-storage locations to occupy when they cannot be kept in real memory. The disk paging space is used for this purpose.

The figure "Persistent and Working Storage Segments" illustrates the relationship between some of the types of segment and the locations of their pages on disk. It also shows the actual (arbitrary) locations of the pages when they are in real memory.

There are further classifications of the persistent-segment types. Client segments are used to map remote files (for example, files that are being accessed via NFS), including remote executables. Pages from client segments are saved and restored over the network to their permanent file location, not on the local-disk paging space. Journaled and deferred segments are persistent segments that must be atomically updated. If a page from a journaled or deferred segment is selected to be removed from real memory (paged out), it must be written to disk paging space unless it is in a state that allows it to be committed (written to its permanent file location).
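The segment types above determine where a page goes when its frame is stolen. The following is a simplified sketch of that decision; the enum names and return strings are hypothetical, and two simplifications are assumed: an unchanged page of any persistent segment is freed without I/O (as described for persistent segments), and a working-segment page always goes to paging space.

```python
from enum import Enum, auto


class SegmentType(Enum):
    PERSISTENT = auto()  # local files holding data or executable programs
    CLIENT = auto()      # remote files, for example accessed via NFS
    JOURNALED = auto()   # persistent, must be updated atomically
    DEFERRED = auto()    # persistent, must be updated atomically
    WORKING = auto()     # stack, data, kernel/shared-library text and data


def page_out_destination(seg: SegmentType, modified: bool, committable: bool = False) -> str:
    """Where a stolen page goes (simplified model, hypothetical names)."""
    if seg is SegmentType.WORKING:
        return "paging space"                    # no permanent disk location
    if not modified:
        return "free list (no I/O)"              # reread from its file later if needed
    if seg is SegmentType.CLIENT:
        return "remote file (over the network)"  # never local paging space
    if seg in (SegmentType.JOURNALED, SegmentType.DEFERRED) and not committable:
        return "paging space"                    # cannot be committed yet
    return "permanent file location"
```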

Computational memory consists of the pages that belong to working-storage segments or program text segments. (A segment is considered to be a program text segment if an instruction cache miss occurs on any of its pages.) File memory consists of the remaining pages.

A page fault is considered to be either a new page fault or a repage fault. A new page fault occurs when there is no record of the page having been referenced recently. A repage fault occurs when a page that is known to have been referenced recently is referenced again, and is not found in memory because the page has been replaced (and perhaps written to disk) since it was last accessed. A perfect (clairvoyant) page-replacement policy would eliminate repage faults entirely (assuming adequate real memory) by always stealing frames from pages that are not going to be referenced again. Thus, the number of repage faults is an inverse measure of the effectiveness of the page-replacement algorithm in keeping frequently reused pages in memory, thereby reducing overall I/O demand and potentially improving system performance.

In order to classify a page fault as new or repage, the VMM maintains a repage history buffer that contains the page IDs of the N most recent page faults, where N is the number of frames that the memory can hold. For example, a 16MB memory requires a 4096-entry repage history buffer. At page in, if the page's ID is found in the repage history buffer, it is counted as a repage. Also, the VMM estimates the computational-memory repaging rate and the file-memory repaging rate separately by maintaining counts of repage faults for each type of memory. The repaging rates are multiplied by 0.9 each time the page-replacement algorithm runs, so that they reflect recent repaging activity more strongly than historical repaging activity.
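The repage history buffer can be modeled as a first-in, first-out record of the last N faulting page IDs, where N is the number of frames real memory can hold. This is a toy sketch: the `deque`/`Counter` data structures and the class and method names are assumptions for illustration, not the VMM's internal implementation.

```python
from collections import Counter, deque


class RepageHistory:
    """Toy model of the repage history buffer and per-type repaging rates."""

    DECAY = 0.9  # rates are scaled by 0.9 each time page replacement runs

    def __init__(self, real_memory_bytes: int, page_size: int = 4096):
        # N = number of page frames that real memory can hold
        self.capacity = real_memory_bytes // page_size
        if self.capacity < 1:
            raise ValueError("memory must hold at least one frame")
        self.recent = deque()    # page IDs of the N most recent faults, oldest first
        self.counts = Counter()  # occurrences of each page ID in `recent`
        self.repage_rate = {"computational": 0.0, "file": 0.0}

    def page_in(self, page_id, kind: str) -> bool:
        """Record a page fault on `page_id`; return True if it counts as a repage."""
        is_repage = self.counts[page_id] > 0
        if is_repage:
            self.repage_rate[kind] += 1
        if len(self.recent) == self.capacity:  # buffer full: forget the oldest fault
            oldest = self.recent.popleft()
            self.counts[oldest] -= 1
            if self.counts[oldest] == 0:
                del self.counts[oldest]
        self.recent.append(page_id)
        self.counts[page_id] += 1
        return is_repage

    def on_replacement_run(self) -> None:
        """Decay both rates so recent repaging outweighs older repaging."""
        for kind in self.repage_rate:
            self.repage_rate[kind] *= self.DECAY
```

For example, `RepageHistory(16 * 1024 * 1024).capacity` is 4096, matching the 16MB case in the text. With a capacity of 3, the fault sequence 1, 2, 1, 3, 4, 2 yields exactly one repage (the second fault on page 1); page 2 has aged out of the buffer by the time it faults again, so it counts as a new page fault.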

Several numerical thresholds define the objectives of the VMM. When one of these thresholds is breached, the VMM takes appropriate action to bring the state of memory back within bounds. This section discusses the thresholds that can be altered by the system administrator via the vmtune command.

The number of page frames on the free list is controlled by:

| Threshold | Description |
|---|---|
| minfree | Minimum acceptable number of real-memory page frames in the free list. When the size of the free list falls below this number, the VMM begins stealing pages. It continues stealing pages until the size of the free list reaches maxfree. |
| maxfree | Maximum size to which the free list will grow by VMM page stealing. The size of the free list may exceed this number as a result of processes terminating and freeing their working-segment pages or the deletion of files that have pages in memory. |

The VMM attempts to keep the size of the free list greater than or equal to minfree. When page faults and/or system demands cause the free list size to fall below minfree, the page-replacement algorithm is run. The size of the free list must be kept above a certain level (the default value of minfree) for several reasons. For example, the AIX sequential-prefetch algorithm requires several frames at a time for each process that is doing sequential reads. Also, the VMM must avoid deadlocks within the operating system itself, which could occur if there were not enough space to read in a page that was required in order to free a page frame.
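The minfree/maxfree rule amounts to simple arithmetic on the free-list size. A minimal sketch, with a hypothetical function name and illustrative threshold values (not a statement of the actual defaults):

```python
def frames_to_steal(free_frames: int, minfree: int, maxfree: int) -> int:
    """Frames page replacement would try to steal (simplified model)."""
    if minfree > maxfree:
        raise ValueError("minfree must not exceed maxfree")
    if free_frames >= minfree:
        return 0                    # free list is healthy: no stealing
    return maxfree - free_frames    # steal until the free list reaches maxfree


print(frames_to_steal(100, minfree=120, maxfree=128))  # 28
```

Once stealing starts, it runs past minfree all the way to maxfree, which keeps the algorithm from being re-triggered by every subsequent page fault.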

The following thresholds are expressed as percentages. They represent the fraction of the total real memory of the machine that is occupied by file pages (pages of noncomputational segments).

| Threshold | Description |
|---|---|
| minperm | If the percentage of real memory occupied by file pages falls below this level, the page-replacement algorithm steals both file and computational pages, regardless of repage rates. |
| maxperm | If the percentage of real memory occupied by file pages rises above this level, the page-replacement algorithm steals only file pages. |

When the percentage of real memory occupied by file pages is between minperm and maxperm, the VMM normally steals only file pages, but if the repaging rate for file pages is higher than the repaging rate for computational pages, computational pages are stolen as well.
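The three cases (below minperm, above maxperm, and in between) can be written as a single decision function. This is a sketch with hypothetical names; percentages and repaging rates are passed in as plain numbers.

```python
def steal_targets(file_pct: float, minperm: float, maxperm: float,
                  file_repage_rate: float, comp_repage_rate: float) -> set[str]:
    """Which page types page replacement may steal (simplified model)."""
    if file_pct < minperm:
        return {"file", "computational"}  # regardless of repage rates
    if file_pct > maxperm:
        return {"file"}
    # Between minperm and maxperm: normally file pages only, unless file
    # pages are repaging faster than computational pages.
    if file_repage_rate > comp_repage_rate:
        return {"file", "computational"}
    return {"file"}
```

For example, with minperm at 20 and maxperm at 80 (illustrative values), a system whose file pages occupy 50% of memory steals computational pages only while the file repaging rate exceeds the computational one.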

The main intent of the page-replacement algorithm is to ensure that computational pages are given fair treatment; for example, the sequential reading of a long data file into memory should not cause the loss of program text pages that are likely to be used again soon. The page-replacement algorithm's use of the thresholds and repaging rates ensures that both types of pages get treated fairly, with a slight bias in favor of computational pages.

When a process references a virtual-memory page that is on disk, because it either has been paged out or has never been read, the referenced page must be paged in and, on average, one or more pages must be paged out, creating I/O traffic and delaying the progress of the process.

AIX attempts to steal real memory from pages that are unlikely to be referenced in the near future, via the page-replacement algorithm. A successful page-replacement algorithm allows the operating system to keep enough processes active in memory to keep the CPU busy. But at some level of competition for memory (depending on the total amount of memory in the system, the number of processes, the time-varying memory requirements of each process, and the page-replacement algorithm), no pages are good candidates for paging out to disk because they will all be reused in the near future by the active set of processes.

When this happens, continuous paging in and paging out occurs. This condition is called thrashing. Thrashing results in incessant I/O to the paging disk and causes each process to encounter a page fault almost as soon as it is dispatched, with the result that none of the processes make any significant progress. The most pernicious aspect of thrashing is that, although thrashing may have been triggered by a brief, random peak in workload (such as all of the users of a system happening to hit the Enter key in the same second), the system may continue thrashing for an indefinitely long time.

AIX has a memory load control algorithm that detects when the system is starting to thrash and then suspends active processes and delays the initiation of new processes for a period of time. Five parameters set rates and bounds for the algorithm. The default values of these parameters have been chosen to "fail safe" across a wide range of workloads. For special situations, a mechanism for tuning (or disabling) load control is available (see Tuning VMM Memory Load Control).

The memory load control mechanism assesses, once a second, whether sufficient memory is available for the set of active processes. When a memory overcommitment condition is detected, some processes are suspended, decreasing the number of active processes and thereby decreasing the level of memory overcommitment. When a process is suspended, all of its threads are suspended when they reach a suspendable state. The pages of the suspended processes quickly become stale and are paged out via the page replacement algorithm, releasing enough page frames to allow the remaining active processes to progress. During the interval in which existing processes are suspended, newly created processes are also suspended, preventing new work from entering the system. Suspended processes are not reactivated until a subsequent interval passes during which no potential thrashing condition exists. Once this safe interval has passed, the threads of the suspended processes are gradually reactivated.

Memory load control parameters specify: the system memory overcommitment threshold; the number of seconds required to make a safe interval; the individual process's memory overcommitment threshold by which an individual process is qualified as a suspension candidate; the minimum number of active processes when processes are being suspended; and the minimum number of elapsed seconds of activity for a process after reactivation.

These parameters and their default values are:

| Parameter | Meaning | Default |
|---|---|---|
| h | High memory-overcommitment threshold | 6 |
| w | Wait to reactivate suspended processes | 1 second |
| p | Process memory-overcommitment threshold | 4 |
| m | Minimum degree of multiprogramming | 2 |
| e | Elapsed time exempt from suspension | 2 seconds |

All parameters are nonnegative integer values.

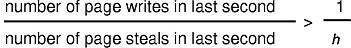

The h parameter controls the threshold defining memory overcommitment. Memory load control attempts to suspend processes when this threshold is exceeded during any one-second period. The threshold is a relationship between two direct measures: the number of pages written to paging space in the last second, and the number of page steals occurring in the last second. The number of page writes is usually much less than the number of page steals. Memory is considered overcommitted when:

$$\frac{\text{pages written to paging space in the last second}}{\text{page steals in the last second}} > \frac{1}{h}$$

As this fraction increases, thrashing becomes more likely. The default value of 6 for h means that the system is considered likely to thrash when the fraction of page writes to page steals exceeds 1/6 (about 17%). A lower value of h (which can be as low as zero, because the test is made without an actual division) raises the thrashing detection threshold; that is, the system is allowed to come closer to thrashing before processes are suspended. This fraction was chosen as a thrashing threshold because it is comparatively configuration-independent. Regardless of the disk paging capacity and the number of megabytes of memory installed in the system, thrashing is unlikely when the fraction is low and certain when it is near 1.0. A period in which memory is not overcommitted is called a safe period.
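Because the test is made without a division, the fraction comparison can be rearranged into a multiplication, which is why h = 0 is a legal value. A minimal sketch (the function name is hypothetical):

```python
def memory_overcommitted(page_writes: int, page_steals: int, h: int) -> bool:
    """writes / steals > 1 / h, rearranged as h * writes > steals.

    With h = 0 the left side is always 0, so memory is never judged
    overcommitted; larger h values trigger at smaller fractions.
    """
    return h * page_writes > page_steals


print(memory_overcommitted(17, 100, h=6))  # True: 17% exceeds 1/6
print(memory_overcommitted(16, 100, h=6))  # False: 16% is below 1/6
```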

The w parameter controls the number of one-second intervals during which the above fraction must remain below 1/h before suspended processes are reactivated. The default value of one second is close to the minimum value allowed, zero. A value of one second aggressively attempts to reactivate processes as soon as a one-second safe period has occurred. Large values of w run the risk of unnecessarily poor response times for suspended processes, while the processor is idle for lack of active processes to run.
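The safe-interval rule can be sketched as a counter of consecutive one-second intervals without overcommitment. This assumes the w intervals must be consecutive (any overcommitted second restarts the count); the class and method names are hypothetical.

```python
class SafeIntervalTracker:
    """Counts consecutive safe seconds (simplified model of the w rule)."""

    def __init__(self, w: int):
        self.w = w
        self.safe_seconds = 0

    def tick(self, overcommitted: bool) -> bool:
        """Call once per second; True means suspended processes may be reactivated."""
        if overcommitted:
            self.safe_seconds = 0  # thrashing criterion met: start over
            return False
        self.safe_seconds += 1
        return self.safe_seconds >= self.w
```

With w = 2, the sequence overcommitted, safe, safe permits reactivation only on the third tick; the default w = 1 permits it after the first safe second.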

The p parameter determines whether a process is eligible for suspension. Analogous to the h parameter, the p parameter is used to set a threshold for the ratio of two measures that are maintained for every process. The two measures are the number of repages (defined in the earlier section on page replacement) that the process has accumulated in the last second and the number of page faults that the process has accumulated in the last second. A high ratio of repages to page faults means the individual process is thrashing. A process is considered eligible for suspension (it is thrashing or contributing to overall thrashing) when:

$$\frac{\text{repages of the process in the last second}}{\text{page faults of the process in the last second}} > \frac{1}{p}$$

The default value of p is 4, meaning that a process is considered to be thrashing (and a candidate for suspension) when the fraction of repages to page faults over the last second is greater than 1/4 (25%). A low value of p (which can be as low as zero, because the test is made without an actual division) results in a higher degree of individual process thrashing being allowed before a process is eligible for suspension. A value of zero means that no process can be suspended by memory load control.
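The per-process test has the same division-free shape as the h test. A minimal sketch with a hypothetical function name:

```python
def process_thrashing(repages: int, page_faults: int, p: int) -> bool:
    """repages / faults > 1 / p, rearranged as p * repages > faults.

    With p = 0 this is never true, so no process can be suspended.
    """
    return p * repages > page_faults


print(process_thrashing(26, 100, p=4))  # True: 26% exceeds 25%
print(process_thrashing(25, 100, p=4))  # False: exactly 25% is not greater
```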

The m parameter determines a lower limit for the degree of multiprogramming. The degree of multiprogramming is defined as the number of active (not suspended) processes. (Each process is counted as one, regardless of the number of threads running in it.) Excluded from the count are the kernel process and processes that (1) have fixed priorities with priority values less than 60, (2) have pinned memory, or (3) are awaiting events, since no process in these categories is ever eligible for suspension. The default value of 2 ensures that at least two user processes are always able to be active.

Lower values of m, while allowed, mean that at times as few as one user process may be active. High values of m effectively defeat the ability of memory load control to suspend processes. This parameter is very sensitive to configuration and workload. Too small a value of m in a large configuration results in overly aggressive suspension; too large a value of m for a small-memory configuration does not allow memory load control to be aggressive enough. The default value of 2 is a fail-safe value for small-memory configurations; it is likely to be suboptimal for large configurations in which many tens of processes can and should be active to exploit available resources.

For example, if one knows that for a particular configuration and a particular workload, approximately 25 concurrent processes can successfully progress, while more than 25 concurrent processes run the risk of thrashing, then setting m to 25 may be a worthwhile experiment.

Each time a suspended process is reactivated, it is exempt from suspension for a period of e elapsed seconds. This is to ensure that the high cost (in disk I/O) of paging in a suspended process's pages results in a reasonable opportunity for progress. The default value of e is 2 seconds.

Once per second, the scheduler (process 0) examines the values of all the above measures that have been collected over the preceding one-second interval, and determines if processes are to be suspended or activated. If processes are to be suspended, every process eligible for suspension by the p and e parameter test is marked for suspension. When that process next receives the CPU in user mode, it is suspended (unless doing so would reduce the number of active processes below m). The user-mode criterion is applied so that a process is ineligible for suspension during critical system activities performed on its behalf. If, during subsequent one-second intervals, the thrashing criterion is still being met, additional process candidates meeting the criteria set by p and e are marked for suspension. When the scheduler subsequently determines that the safe-interval criterion has been met and processes are to be reactivated, some number of suspended processes are put on the run queue (made active) every second.
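The marking-then-suspending sequence can be sketched as two functions: a once-per-second pass that marks processes passing the p and e tests, and a check made when a marked process next runs in user mode, which enforces the m lower bound. Everything here is a simplified model: the `Proc` fields (notably `secs_since_reactivation`) and function names are hypothetical, and the process list is assumed to contain only processes that count toward the degree of multiprogramming.

```python
from dataclasses import dataclass


@dataclass
class Proc:
    pid: int
    repages: int      # repages in the last one-second interval
    page_faults: int  # page faults in the last one-second interval
    secs_since_reactivation: float = float("inf")  # hypothetical; inf = never suspended
    marked: bool = False
    suspended: bool = False


def mark_candidates(procs: list[Proc], p: int, e: int) -> None:
    """Once-per-second pass: mark every process that passes the p and e tests."""
    for proc in procs:
        exempt = proc.secs_since_reactivation < e  # recently reactivated
        thrashing = p * proc.repages > proc.page_faults
        if not proc.suspended and not exempt and thrashing:
            proc.marked = True


def on_user_mode_dispatch(proc: Proc, procs: list[Proc], m: int) -> bool:
    """Suspend a marked process in user mode unless fewer than m would stay active."""
    active = sum(1 for q in procs if not q.suspended)
    if proc.marked and active - 1 >= m:
        proc.marked = False
        proc.suspended = True
        return True
    return False
```

For example, with p = 4 and e = 2, a process showing 30 repages out of 100 faults is marked, while an identical process reactivated one second ago is exempt. With m = 2 and three active processes, the first marked process is suspended; a second one is not, because that would leave only one process active.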

Suspended processes are reactivated (1) by priority and (2) by the order in which they were suspended. The suspended processes are not all reactivated at once. A value for the number of processes reactivated is selected by a formula that recognizes the number of then-active processes and reactivates either one-fifth of the number of then-active processes or a monotonically increasing lower bound, whichever is greater. This cautious strategy results in increasing the degree of multiprogramming roughly 20% per second. The intent of this strategy is to make the rate of reactivation relatively slow during the first second after the safe interval has expired, while steadily increasing the reintroduction rate in subsequent seconds. If the memory-overcommitment condition recurs during the course of reactivating processes, reactivation is halted; the "marked to be reactivated" processes are again marked suspended; and additional processes are suspended in accordance with the above rules.
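The reactivation rate can be illustrated by simulating successive seconds. The text does not specify the monotonically increasing lower bound, so this sketch assumes, purely for illustration, that it starts at 1 and grows by 1 each second, and that "one-fifth" rounds down. Ordering by priority and suspension order is not modeled.

```python
def reactivation_schedule(active: int, suspended: int, first_bound: int = 1) -> list[int]:
    """Processes reactivated in each successive second (hypothetical lower bound)."""
    if first_bound < 1:
        raise ValueError("lower bound must be at least 1")
    batches = []
    bound = first_bound
    while suspended > 0:
        # one-fifth of the then-active processes, or the lower bound, whichever is greater
        n = min(suspended, max(active // 5, bound))
        batches.append(n)
        active += n
        suspended -= n
        bound += 1  # assumed growth of the lower bound
    return batches


print(reactivation_schedule(100, 50))  # [20, 24, 6]
```

With many active processes the one-fifth term dominates, giving roughly 20% growth per second; with few, the rising lower bound keeps reactivation moving (for example, `reactivation_schedule(10, 20)` gives `[2, 2, 3, 4, 5, 4]`).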

The five parameters of the memory-load-control facility can be set by the system administrator via the schedtune command. Techniques for tuning the memory-load-control facility are described in Chapter 7, "Monitoring and Tuning Memory Use."

AIX supports two schemes for allocation of paging-space slots. Under the normal, late-allocation algorithm, a paging slot is allocated to a page of virtual memory only when that page is first read from or written into. That is the first time that the page's content is of interest to the executing program.

Many programs exploit late allocation by allocating virtual-memory address ranges for maximum-sized structures and then only using as much of the structure as the situation requires. The pages of the virtual-memory address range that are never accessed never require real-memory frames or paging-space slots.

This technique does involve some degree of risk. If all of the programs running in a machine happened to encounter maximum-size situations simultaneously, paging space might be exhausted. Some programs might not be able to continue to completion.

The second AIX paging-space-slot-allocation scheme is intended for use in installations where this situation is likely, or where the cost of failure to complete is intolerably high. Aptly called early allocation, this algorithm causes the appropriate number of paging-space slots to be allocated at the time the virtual-memory address range is allocated, for example, with malloc. If there are not enough paging-space slots to support the malloc, an error code is set. The early-allocation algorithm is invoked with:

export PSALLOC=early

This causes all future programs execed in the environment to use early allocation. It does not affect the currently executing shell.
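The difference between the two schemes is when a paging-space slot is charged: at first touch (late) or at allocation time (early). The toy accounting model below illustrates this; the class, its methods, and the slot counts are hypothetical, and slot release (by process termination or `disclaim`) is not modeled.

```python
class PagingSpace:
    """Toy paging-space slot accounting; names and structure are hypothetical."""

    def __init__(self, total_slots: int, early: bool):
        self.free_slots = total_slots
        self.early = early  # True models PSALLOC=early
        self.regions: dict[str, dict] = {}

    def allocate_region(self, name: str, pages: int) -> bool:
        """Model a malloc of `pages` pages; False models the error return."""
        if self.early:
            if pages > self.free_slots:
                return False          # early: the failure is reported up front
            self.free_slots -= pages  # reserve a slot for every page now
        self.regions[name] = {"pages": pages, "touched": set()}
        return True

    def touch(self, name: str, page: int) -> bool:
        """First read or write of a page; False means paging space ran out."""
        region = self.regions[name]
        if not 0 <= page < region["pages"]:
            raise IndexError("page outside region")
        if page in region["touched"]:
            return True
        if not self.early:
            if self.free_slots == 0:
                return False          # late: the failure surfaces mid-run
            self.free_slots -= 1      # slot assigned on first touch
        region["touched"].add(page)
        return True
```

Under late allocation, a 1000-page region that only ever touches 10 pages consumes 10 slots; under early allocation the same request fails outright if fewer than 1000 slots are free. This is the "malloc a lot, use what you need" trade-off discussed next.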

Early allocation is of interest to the performance analyst mainly because of its paging-space size implications. Many existing programs make use of the "malloc a lot, use what you need" technique. If early allocation is turned on for those programs, paging-space requirements can increase manyfold. Whereas the normal recommendation for paging-space size is at least twice the size of the system's real memory, the recommendation for systems that use PSALLOC=early is at least four times real memory size. Actually, this is just a starting point. You really need to analyze the virtual storage requirements of your workload and allocate paging spaces to accommodate them. As an example, at one time the AIXwindows server required 250MB of paging space when run with early allocation.

You should remember, too, that paging-space slots are only released by process (not thread) termination or by the disclaim system call. They are not released by free.

See Placement and Sizes of Paging Spaces for more information on paging space allocation and monitoring.